TensorRT-LLM

Overview

This guide walks you through installing Jan's official TensorRT-LLM Engine. This engine uses Cortex-TensorRT-LLM as the AI engine instead of the default Cortex-Llama-CPP. It includes an efficient C++ server that executes the TRT-LLM C++ runtime natively. It also includes features and performance improvements like OpenAI compatibility, tokenizer improvements, and queues.

This feature is only available for Windows users. Linux is coming soon.

Pre-requisites

- A Windows PC.

- NVIDIA GPU(s): Ada or Ampere series (i.e., RTX 40- and 30-series cards). More will be supported soon.

- Sufficient disk space for the TensorRT-LLM models and data files (space requirements vary depending on the model size).
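If you are unsure which architecture your GPU belongs to, the check can be scripted. The sketch below is not part of Jan; it assumes `nvidia-smi` is on your PATH and that your driver is recent enough to support the `compute_cap` query field. The function names are hypothetical. It maps NVIDIA compute capabilities 8.0, 8.6, and 8.7 to Ampere and 8.9 to Ada.

```python
import subprocess
from typing import List, Optional, Tuple

# Compute capabilities of the two architectures this guide lists as supported.
SUPPORTED_ARCHS = {
    "8.0": "Ampere",
    "8.6": "Ampere",
    "8.7": "Ampere",
    "8.9": "Ada",
}


def classify_gpu(compute_cap: str) -> Optional[str]:
    """Return 'Ampere' or 'Ada' for a supported compute capability, else None."""
    return SUPPORTED_ARCHS.get(compute_cap.strip())


def parse_gpu_report(report: str) -> List[Tuple[str, Optional[str]]]:
    """Parse 'name, compute_cap' CSV lines into (name, architecture) pairs."""
    gpus = []
    for line in report.splitlines():
        if not line.strip():
            continue
        # The capability is the last comma-separated field on each line.
        name, _, cap = line.rpartition(",")
        gpus.append((name.strip(), classify_gpu(cap)))
    return gpus


def query_gpus() -> str:
    """Ask nvidia-smi for each GPU's name and compute capability (assumes a recent driver)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,compute_cap", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

Calling `parse_gpu_report(query_gpus())` on the target machine returns one pair per GPU; an architecture of `None` means the card is outside the Ada and Ampere families listed above.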

Step 1: Install TensorRT-Extension

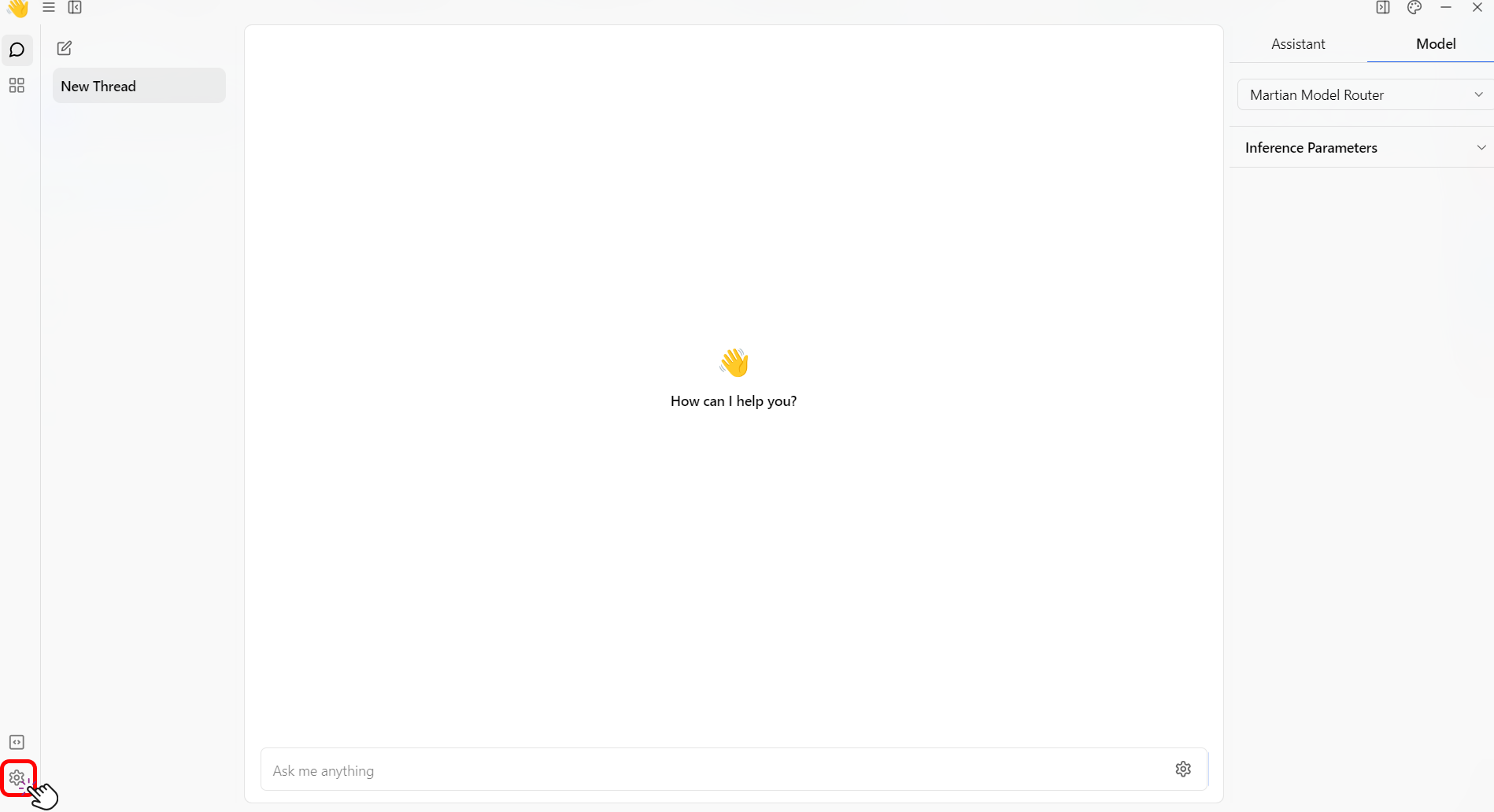

- Click the Gear Icon (⚙️) on the bottom left of your screen.

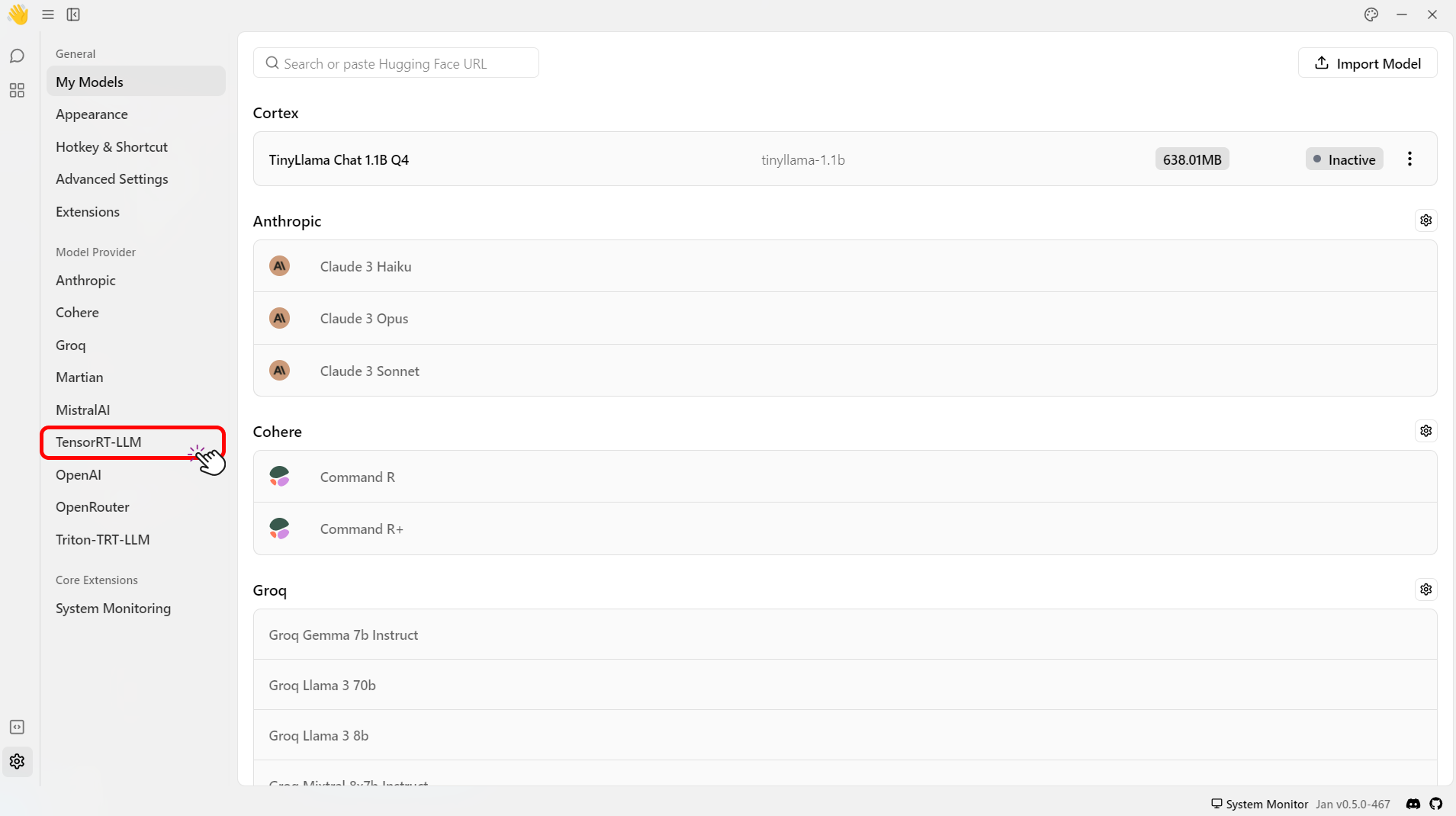

- Select TensorRT-LLM under the Model Provider section.

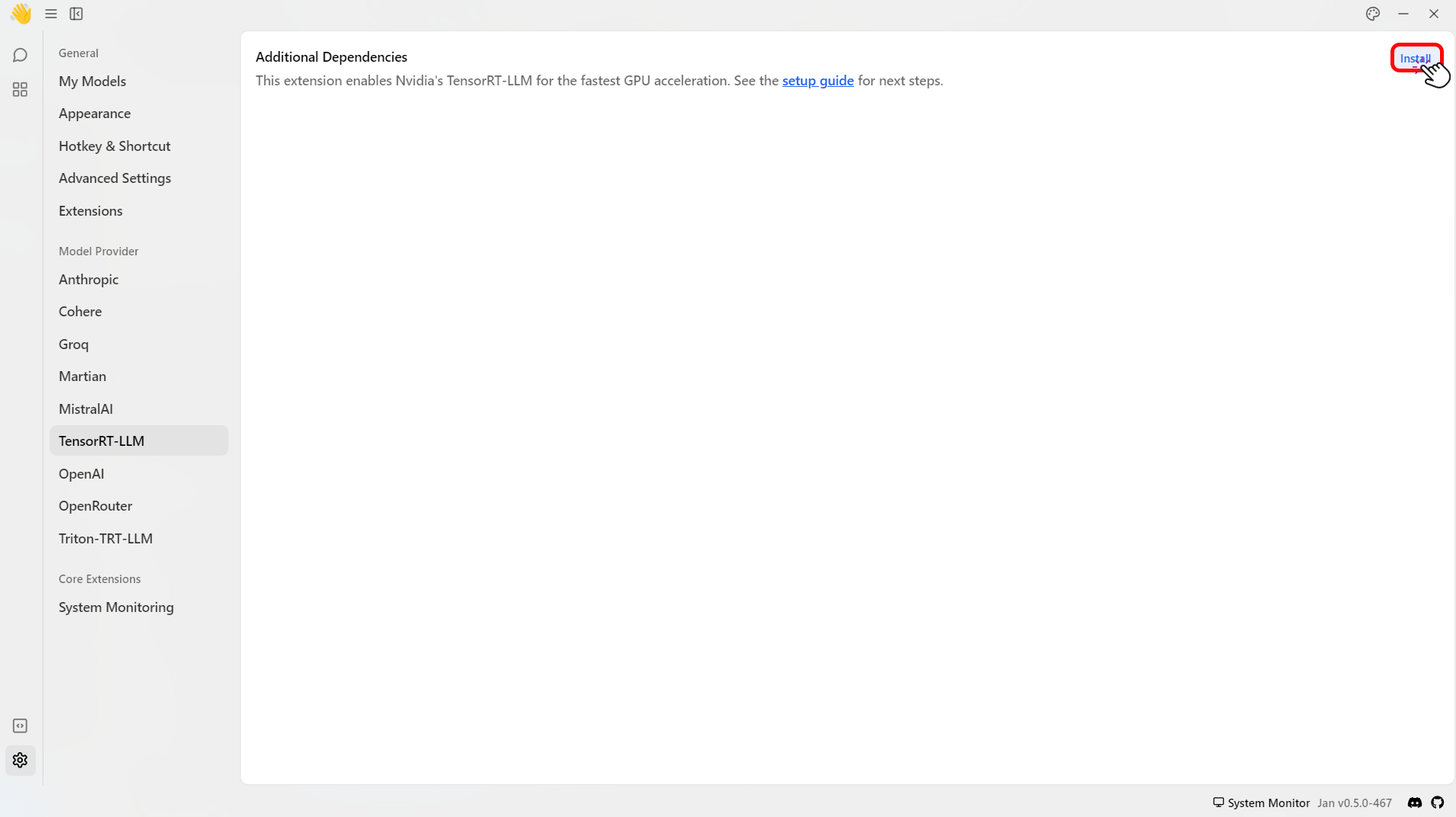

- Click Install to install the required dependencies to use TensorRT-LLM.

- Check that the files were downloaded correctly.

ls ~/jan/data/extensions/@janhq/tensorrt-llm-extension/dist/bin
# Your extension folder should now include `nitro.exe`, among other artifacts needed to run TRT-LLM
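The same check can be done programmatically, which is handy if `ls` is not available in your Windows shell. This is a minimal sketch, assuming the extension folder sits at the path used in this step; `find_missing` is a hypothetical helper, and only `nitro.exe` is checked because it is the only artifact this guide names.

```python
from pathlib import Path

# Path taken from this step; adjust it if your Jan data folder lives elsewhere.
DEFAULT_BIN_DIR = (
    Path.home() / "jan" / "data" / "extensions" / "@janhq"
    / "tensorrt-llm-extension" / "dist" / "bin"
)


def find_missing(bin_dir: Path, required=("nitro.exe",)) -> list:
    """Return the required file names that are not present under bin_dir."""
    if not bin_dir.is_dir():
        return list(required)
    # Search subfolders too (an assumption: artifacts may be nested).
    # Names are compared case-insensitively, as on Windows file systems.
    present = {p.name.lower() for p in bin_dir.rglob("*") if p.is_file()}
    return [name for name in required if name.lower() not in present]
```

`find_missing(DEFAULT_BIN_DIR)` returns an empty list when `nitro.exe` is in place. A non-empty list suggests the install did not finish and is worth retrying.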

Step 2: Download a Compatible Model

TensorRT-LLM can only run models in TensorRT format. These models, aka "TensorRT Engines", are prebuilt for each target OS+GPU architecture.

We offer a handful of precompiled models for Ampere and Ada cards that you can immediately download and play with:

- Restart the application and go to the Hub.

- Look for models with the `TensorRT-LLM` label in the recommended models list, then click Download.

This step might take some time. 🙏

- Click Download to download the model.

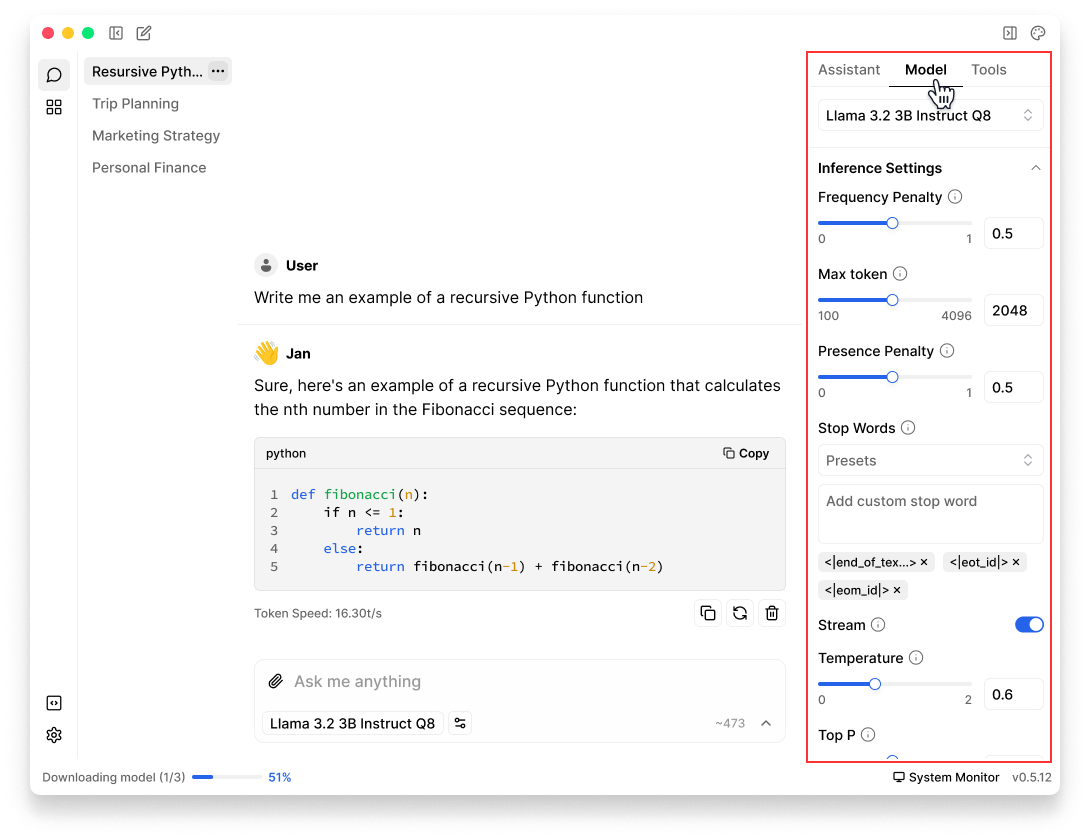

Step 3: Configure Settings

- Navigate to the Thread section.

- Select the model that you have downloaded.

- Customize the default parameters of the model for how Jan runs TensorRT-LLM.

Please see here for more detailed model parameters.
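Because the engine is described as OpenAI-compatible, the same kind of parameters can in principle be sent in an OpenAI-style chat completion request. The sketch below rests on several assumptions: that Jan's local API server is enabled and reachable at `http://localhost:1337/v1`, that it accepts the standard `temperature` and `max_tokens` fields, and that you substitute the ID of the model you downloaded. The function names and the model ID are hypothetical.

```python
import json
import urllib.request

# Assumption: Jan's local API server is enabled and listening at this address.
BASE_URL = "http://localhost:1337/v1"


def build_chat_request(model_id, prompt, temperature=0.7, max_tokens=256):
    """Build an OpenAI-style chat completion payload as a plain dict."""
    return {
        "model": model_id,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def send_chat_request(payload, base_url=BASE_URL):
    """POST the payload to the chat completions endpoint and return the parsed JSON."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `send_chat_request(build_chat_request("your-model-id", "Hello"))` sends a single user message; `"your-model-id"` is a placeholder, not a real model name.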